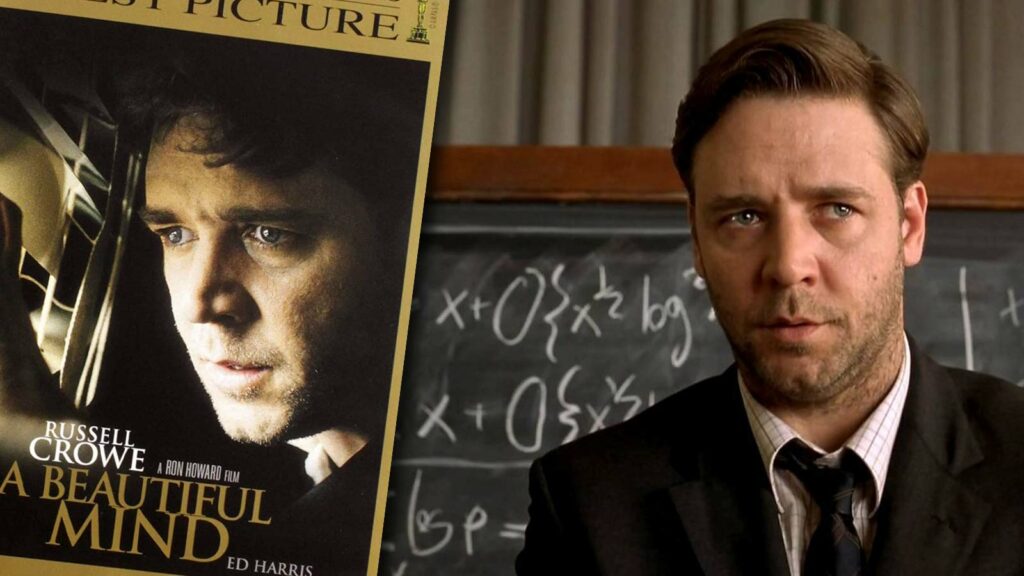

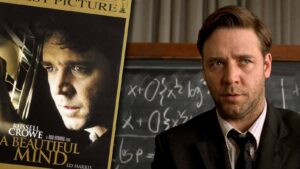

Several movies featuring brilliant mathematicians have been made over the years. The lead characters of these films have mostly been portrayed as quirky geniuses or troubled math legends. Such movies often depict mathematics as an exclusive area of endeavor. Other characters in these films are shown as

3 Best Books for Learning Mathematics

You might be a student or simply someone who loves reading and writing. Whether it’s reviews, essays, or stories, you have no trouble writing them. They come so easily to you that you wouldn’t mind helping teens with their academic papers. When it comes to math, however, you’re terrified. Physics,

A Brief Overview of the Advancement of Mathematics

Long before the modern age, many mathematical discoveries took place in different parts of the world. Evidence survives in the form of structures built with astonishing geometric precision, accuracy that wouldn’t have been possible without mathematical knowledge. Written records of some of these ancient mathematical developments survive

How Math Can Be Made More Interesting for Students

Teachers often hear their students say that they hate math. Most of these students think the subject has no relevance to real life, while others say it’s too hard. Despite this, there are certain things teachers can do to make this subject

4 Reasons Why Many Students Hate Mathematics

You might be one of those people who grew up hating mathematics. Many students declare with great disdain that they hate math or conclude that the subject is simply too hard. You might even have said something like this as a kid in school. Mathematics is one of the subjects

4 Ways to Apply Math in Your Routine Life

You’ve almost certainly studied mathematics in school. As a teenager, you might’ve wondered why math is so important. It remains one of the core subjects taught in schools, and it’s required in almost every field, from engineering and technology to science. Generally, it’s

Cracking the Online Casino Bonus Math

Every online casino out there seems to be handing out bonuses for free. When you come across an online casino bonus, it might look like a fabulous deal. Some of the bonuses that online casinos offer really are good. Others, however, may not be as

5 Most Influential Mathematicians of All Time

Mathematics has revolutionized our lives in so many ways. In fact, many of the technological advances of recent centuries wouldn’t have been possible without it. It is one of the subjects humanity has known since prehistoric times. Without mathematics, it wouldn’t have been possible for engineers to design great

5 Prominent Characteristics of Mathematics

According to Aristotle, mathematics is the science of quantity. Over the years, many polymaths and mathematicians came up with their own definitions of the subject. These definitions were largely abandoned during the 19th century, when the study of mathematics intensified. It began dealing with abstract topics that were